Title

OSM_Autoscaler

Organization

Description of functionalities

How OSM’s stock autoscaler operates

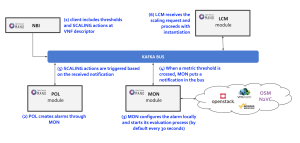

The figure above depicts the high-level architecture of the stock autoscaler of OSM Release 5 (R5).

For a VNF that includes a scaling descriptor, each VDU is monitored individually for the selected metric. Based on the retrieved metrics, the stock autoscaler can trigger a scale-in or a scale-out operation. For example, assume a VNF employs 3 VDUs with CPU loads of 62% (VDU1), 93% (VDU2) and 80% (VDU3), and the scale-out threshold is set to 60%. The MON module (see the diagram above) will then place three scale-out alarm notifications on the Kafka bus. However, if the cooldown parameter is in use within the OSM configuration, only one of these alarms will result in an actual scale-out operation. For the remaining alarms, the POL module reports that not enough time has passed since the last scale-out operation, and it therefore skips those scaling requests.
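The following toy Python simulation makes the interplay between MON and POL concrete. It reproduces the example above: per-VDU alarms for 62%, 93% and 80% CPU load against a 60% threshold, filtered by a cooldown. This is a simplified model and not OSM code. The class and function names and the 120 s cooldown value are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


def mon_alarms(cpu_by_vdu: dict[str, float], scale_out_threshold: float) -> list[tuple[str, str]]:
    """Toy MON: one scale-out alarm per VDU whose CPU load exceeds the threshold."""
    return [(vdu, "SCALE_OUT") for vdu, cpu in cpu_by_vdu.items() if cpu > scale_out_threshold]


@dataclass
class ToyPolicyEngine:
    """Toy POL: forwards an alarm only if the cooldown has elapsed since the last action."""
    cooldown_s: float
    last_action_ts: Optional[float] = None
    forwarded: list = field(default_factory=list)
    skipped: list = field(default_factory=list)

    def handle_alarm(self, vdu: str, action: str, now: float) -> None:
        if self.last_action_ts is not None and now - self.last_action_ts < self.cooldown_s:
            self.skipped.append((vdu, action))  # "not enough time has passed"
            return
        self.last_action_ts = now
        self.forwarded.append((vdu, action))  # would become one scaling request to LCM


loads = {"VDU1": 62.0, "VDU2": 93.0, "VDU3": 80.0}
alarms = mon_alarms(loads, scale_out_threshold=60.0)
pol = ToyPolicyEngine(cooldown_s=120.0)  # hypothetical cooldown value
for vdu, action in alarms:
    pol.handle_alarm(vdu, action, now=0.0)  # all alarms arrive in the same evaluation interval

print(len(alarms), len(pol.forwarded), len(pol.skipped))  # 3 1 2
```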

In the following, we answer fair questions raised by the 5GINFIRE consortium about the cooldown parameter. The questions concern how the parameter affects our implementation, and how it affects the comparison with OSM’s default autoscaler, which must operate with the cooldown mechanism.

[Q1] Is the cooldown parameter of the default autoscaler something that is meant to be activated/deactivated at will? Or is it meant to be permanently activated?

–> The cooldown parameter is set at the VNF Descriptor (VNFD) level, inside a scaling-group descriptor. It is fixed for the whole lifecycle of the VNF, so it cannot be changed for a running VNF.

[Q2] If the former is true, then your comparison may be unfair: you are comparing the default autoscaler with cooldown against the new autoscaler without cooldown.

–> Our autoscaler needs to specify, within a scaling request sent to the REST API of the NBI module, the number of VDUs by which to scale. This functionality is not supported by OSM R5, and adding it would require modifying the OSM code. Had it been available, we could have kept the cooldown timer and still scaled our VNFs as demanded by our load forecasting algorithms. Since it is not, we bypassed the cooldown restriction so that we could issue multiple scaling requests, each one scaling in or out by, e.g., one (1) VDU as per the configuration.
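The workaround can be sketched as follows: a decision to scale by n VDUs is split into n single-step requests sent to the NBI. The request body layout (`scaleType`, `scaleVnfData`, `scaleByStepData`) and the `/osm/nslcm/v1/ns_instances/{id}/scale` path are modeled on the OSM NBI scale operation as we understand it. Check both against the API of the deployed OSM release. The function names, the `send` callback (standing in for an authenticated HTTP POST) and the example identifiers are hypothetical.

```python
from __future__ import annotations

import copy
from typing import Callable


def build_scale_requests(n_steps: int, direction: str,
                         scaling_group: str, member_vnf_index: str) -> list[dict]:
    """Split one 'scale by n VDUs' decision into n single-step scale requests."""
    if direction not in ("SCALE_OUT", "SCALE_IN"):
        raise ValueError(f"unknown direction: {direction}")
    body = {
        "scaleType": "SCALE_VNF",
        "scaleVnfData": {
            "scaleVnfType": direction,
            "scaleByStepData": {
                "scaling-group-descriptor": scaling_group,
                "member-vnf-index": member_vnf_index,
            },
        },
    }
    # One request per step; deep copies so no two requests share nested dicts.
    return [copy.deepcopy(body) for _ in range(n_steps)]


def issue_scale_requests(ns_id: str, requests: list[dict],
                         send: Callable[[str, dict], None]) -> None:
    """POST each request in turn; `send` is a hypothetical authenticated HTTP wrapper."""
    path = f"/osm/nslcm/v1/ns_instances/{ns_id}/scale"
    for body in requests:
        # A real client would probably wait for each operation to complete first.
        send(path, body)


sent = []
reqs = build_scale_requests(2, "SCALE_OUT", "example-scaling-group", "1")
issue_scale_requests("example-ns-id", reqs, lambda path, body: sent.append((path, body)))
print(len(sent), sent[0][1]["scaleVnfData"]["scaleVnfType"])  # 2 SCALE_OUT
```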

[Q3] What would be the result if you used the default autoscaler without cooldown?

–> It is not possible to omit the cooldown field from the scaling-group descriptor. We tried that option and observed that the POL container crashes, complaining about the missing cooldown parameter. A cooldown value of 0 is accepted, but it is not practical, for the following reason. Setting the cooldown value to 0 would allow OSM’s default autoscaler to respond quickly to changes. However, OSM monitors each VDU individually. With a zero cooldown timer, OSM could therefore issue two actions within the same evaluation interval: a scale-out for a VDU that exceeds the scale-out threshold, and a scale-in for a VDU that falls below the scale-in threshold. These actions would essentially cancel each other out and may lead to system errors. We therefore conclude that, because of this behavior, setting the cooldown parameter to zero is not feasible for the default OSM autoscaler.
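The following toy Python model illustrates why a zero cooldown is problematic. It assumes per-VDU evaluation with a scale-out threshold of 60 and a scale-in threshold of 20. It also assumes a single cooldown timer shared by the scale-in and scale-out actions of the policy. The thresholds, loads and function names are illustrative assumptions, not OSM code.

```python
from __future__ import annotations


def evaluate_per_vdu(cpu_by_vdu: dict[str, float], scale_out_threshold: float,
                     scale_in_threshold: float) -> list[tuple[str, str]]:
    """One alarm per VDU crossing a threshold, mirroring per-VDU monitoring."""
    alarms = []
    for vdu, cpu in cpu_by_vdu.items():
        if cpu > scale_out_threshold:
            alarms.append((vdu, "SCALE_OUT"))
        elif cpu < scale_in_threshold:
            alarms.append((vdu, "SCALE_IN"))
    return alarms


def forward(alarms: list[tuple[str, str]], cooldown_s: float, now: float = 0.0) -> list[str]:
    """Forward alarms whose cooldown has elapsed; with cooldown 0 nothing is filtered."""
    last_action_ts = None
    forwarded = []
    for _vdu, action in alarms:
        if last_action_ts is None or now - last_action_ts >= cooldown_s:
            forwarded.append(action)
            last_action_ts = now
    return forwarded


loads = {"VDU1": 85.0, "VDU2": 12.0, "VDU3": 45.0}  # hypothetical loads in one interval
alarms = evaluate_per_vdu(loads, scale_out_threshold=60.0, scale_in_threshold=20.0)
print(forward(alarms, cooldown_s=0.0))    # ['SCALE_OUT', 'SCALE_IN']  (conflicting actions)
print(forward(alarms, cooldown_s=120.0))  # ['SCALE_OUT']
```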

In addition, a bug in OSM Release 5 causes the MON container to send two (2) alarm notifications, instead of one, for a VDU that exceeds a scaling threshold. With a cooldown value of 0, these would result in duplicate scaling requests, since with a zero cooldown all requests pass through to LCM unfiltered. This would make the situation even more complicated. We reported this bug in the OSM Slack channel.

[Q4] I assume that without cooldown in the default autoscaler, multiple changes would happen continuously and everything would end up exploding somehow. If so, just include these kinds of ideas so that the comparison does not seem unfair, and people understand that the default approach would just not work without cooldown.

–> This statement is very accurate. The default approach would not work without a defined cooldown parameter.

How OSM_Autoscaler operates

As explained in the previous section, OSM’s stock autoscaler respects the cooldown timer. It therefore has to wait for a later evaluation interval, after the cooldown has expired, before performing any additional scaling action. However, if we configure the system to use the manual scaling option (through the REST API of the NBI module), we are not restricted by the cooldown timer and can issue as many scaling requests as required. This makes it possible to employ predictive algorithms (e.g. Holt-Winters, RNN, ARIMA) to anticipate sharp changes in the system’s load and, accordingly, make better-performing scaling decisions.

As an example, assume the following:
- the scale-out threshold is set to 60 (per VDU);
- the OSM_Autoscaler sees that the current number of VDUs is three;
- the total load predicted by the forecasting algorithm implemented inside OSM_Autoscaler is 250.

Three VDUs support a maximum total load of 3×60 = 180, which is not enough. Five VDUs (3+2) support a total load of 5×60 = 300, which covers the predicted 250. The OSM_Autoscaler therefore decides that two additional VDUs are needed to support the rising load efficiently, so that all VDUs have a load below the defined threshold of 60, and triggers two scale-out operations. The OSM_Autoscaler offers analogous advantages when the load decreases: our solution shuts down unnecessary VDUs faster, saving energy for the NFV cloud provider.
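The sizing arithmetic of this example fits in a small Python function. The required number of VDUs is the predicted total load divided by the per-VDU threshold, rounded up. The difference from the current count gives the number of single-step scale-out or scale-in requests. This is a sketch of the reasoning above, not the OSM_Autoscaler code; the function name and the `min_vdus` floor are hypothetical.

```python
import math


def plan_scaling(predicted_total_load: float, threshold_per_vdu: float,
                 current_vdus: int, min_vdus: int = 1) -> tuple[str, int]:
    """Return (action, steps) so the predicted per-VDU load stays within the threshold."""
    # Rounding up keeps the per-VDU load at or below the threshold
    # (strictly below unless the load is an exact multiple of it).
    required = max(min_vdus, math.ceil(predicted_total_load / threshold_per_vdu))
    delta = required - current_vdus
    if delta > 0:
        return "SCALE_OUT", delta
    if delta < 0:
        return "SCALE_IN", -delta
    return "NONE", 0


print(plan_scaling(250, 60, 3))  # ('SCALE_OUT', 2): 5 VDUs x 60 = 300 >= 250
print(plan_scaling(100, 60, 5))  # ('SCALE_IN', 3): 2 VDUs suffice
```

Each returned step would then be issued as a separate one-VDU scaling request.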

The following subsection presents the high-level architecture of our OSM_Autoscaler. Its implementation is described in detail in Deliverable D2 “Implementation report and operation plan”.

Architecture of OSM_Autoscaler

Architecture overview

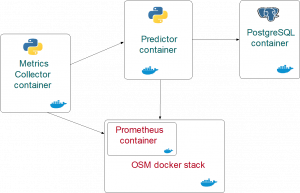

The diagram above presents the architecture of our predictive autoscaler. As shown, it adds predictive functionality to OSM through three main containers: i) the Metrics Collector container, ii) the Predictor container, and iii) the PostgreSQL container. For a detailed description of the functionality of each container, the reader may refer to Deliverable D2.
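The sketch below illustrates the data flow between the containers. A Metrics Collector role persists aggregated CPU-load samples, and a Predictor role reads back the most recent window. Python's built-in `sqlite3` stands in for PostgreSQL so the example runs standalone. The table name, the columns and the choice of storing the summed VNF load are hypothetical. The actual schema and interfaces are described in Deliverable D2.

```python
import sqlite3


def init_store(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) table shared by the collector and the predictor."""
    conn.execute("CREATE TABLE IF NOT EXISTS vnf_cpu_load (ts REAL, vnf_id TEXT, total_load REAL)")


def collect(conn: sqlite3.Connection, ts: float, vnf_id: str, per_vdu_loads: list) -> None:
    """Metrics Collector role: aggregate per-VDU CPU loads and persist one sample."""
    conn.execute("INSERT INTO vnf_cpu_load VALUES (?, ?, ?)", (ts, vnf_id, sum(per_vdu_loads)))
    conn.commit()


def recent_history(conn: sqlite3.Connection, vnf_id: str, window: int) -> list:
    """Predictor role: fetch the latest `window` samples in chronological order."""
    rows = conn.execute(
        "SELECT total_load FROM vnf_cpu_load WHERE vnf_id = ? ORDER BY ts DESC LIMIT ?",
        (vnf_id, window),
    ).fetchall()
    return [r[0] for r in reversed(rows)]


conn = sqlite3.connect(":memory:")
init_store(conn)
for ts, loads in enumerate([[40, 45, 50], [55, 60, 58], [70, 72, 75]]):
    collect(conn, float(ts), "vnf-1", loads)
print(recent_history(conn, "vnf-1", window=2))  # [173.0, 217.0]
```

The window returned by `recent_history` is what a forecasting model in the Predictor would consume.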

Summary of information for publication on the 5GINFIRE website

Goals:

To integrate our predictive autoscaler into Open Source MANO (OSM), leveraging the VNF performance metrics generated by the OSM monitoring module. The retrieved metrics will feed our predictive autoscaling engine, which will generate short-term predictions for the selected CPU load metric. The combined metric predictions will be mapped to SCALE_IN/SCALE_OUT actions for the corresponding VNFs. These actions will trigger the corresponding VDU provisioning or decommissioning calls in the NFV layer. During this process, several system-level and application-level metrics will be recorded in order to compare different approaches to autoscaling: reactive/stock autoscaler vs. proactive/predictive autoscaler, and ARIMA-based vs. Holt-Winters-based forecasts. Our implementation is committed to 5GINFIRE’s code repository for further use by other experimenters, while the user manual is provided in 5GINFIRE’s wiki.
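The sketch below illustrates the forecasting step with Holt's linear (double exponential) smoothing in plain Python. It is deliberately simpler than the Holt-Winters configuration mentioned here, since the seasonal component is omitted. The `alpha`/`beta` values and sample data are illustrative assumptions, not the parameters used in OSM_Autoscaler.

```python
from __future__ import annotations


def holt_forecast(series: list[float], alpha: float = 0.5, beta: float = 0.3,
                  horizon: int = 1) -> float:
    """Holt's linear (double exponential smoothing) forecast `horizon` steps ahead.

    Trend-only simplification of Holt-Winters: the seasonal component is omitted.
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]  # simple initialisation
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)         # smoothed level
        trend = beta * (level - prev_level) + (1 - beta) * trend  # smoothed slope
    return level + horizon * trend


cpu_history = [100.0, 120.0, 140.0, 160.0]  # hypothetical total CPU load samples
print(round(holt_forecast(cpu_history), 6))  # 180.0
```

The forecast would then be mapped to a scaling decision, for example by dividing it by the per-VDU threshold as in the 250/60 example described earlier.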

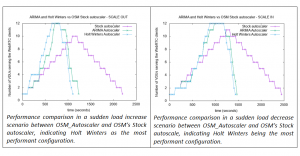

Example Results:

Conclusions:

The forecast models implemented in OSM_Autoscaler are effective in choosing appropriate scale-in and scale-out step sizes. Configured with the Holt-Winters algorithm, OSM_Autoscaler performed best in the majority of the scenarios we emulated during our experimentation.

Overall, this project provided Modio with significant knowledge. Modio will capitalize on it when developing a new engine for predictive autoscaling, intended to be marketed through a licensing scheme to enhance the functionality of commercial NFV cloud products. To that end, Modio (with the help of the 5GINFIRE consortium) has joined the ETSI OSM group as a participant (https://portal.etsi.org/TB-SiteMap/OSM/List-of-OSM-Members) and is aiming for a Proof of Concept project.